Introduction

Machine learning models have become ubiquitous in the computer security domain for tasks like malware detection, binary analysis or vulnerability detection. One drawback of these methods is, however, that their decisions are opaque and leave back the practitioner with the question “What has my model actually learned?”. This is especially true for neural networks which show great performance in many tasks but use millions of parameters in complex decision functions at the same time.

Explainable Machine Learning

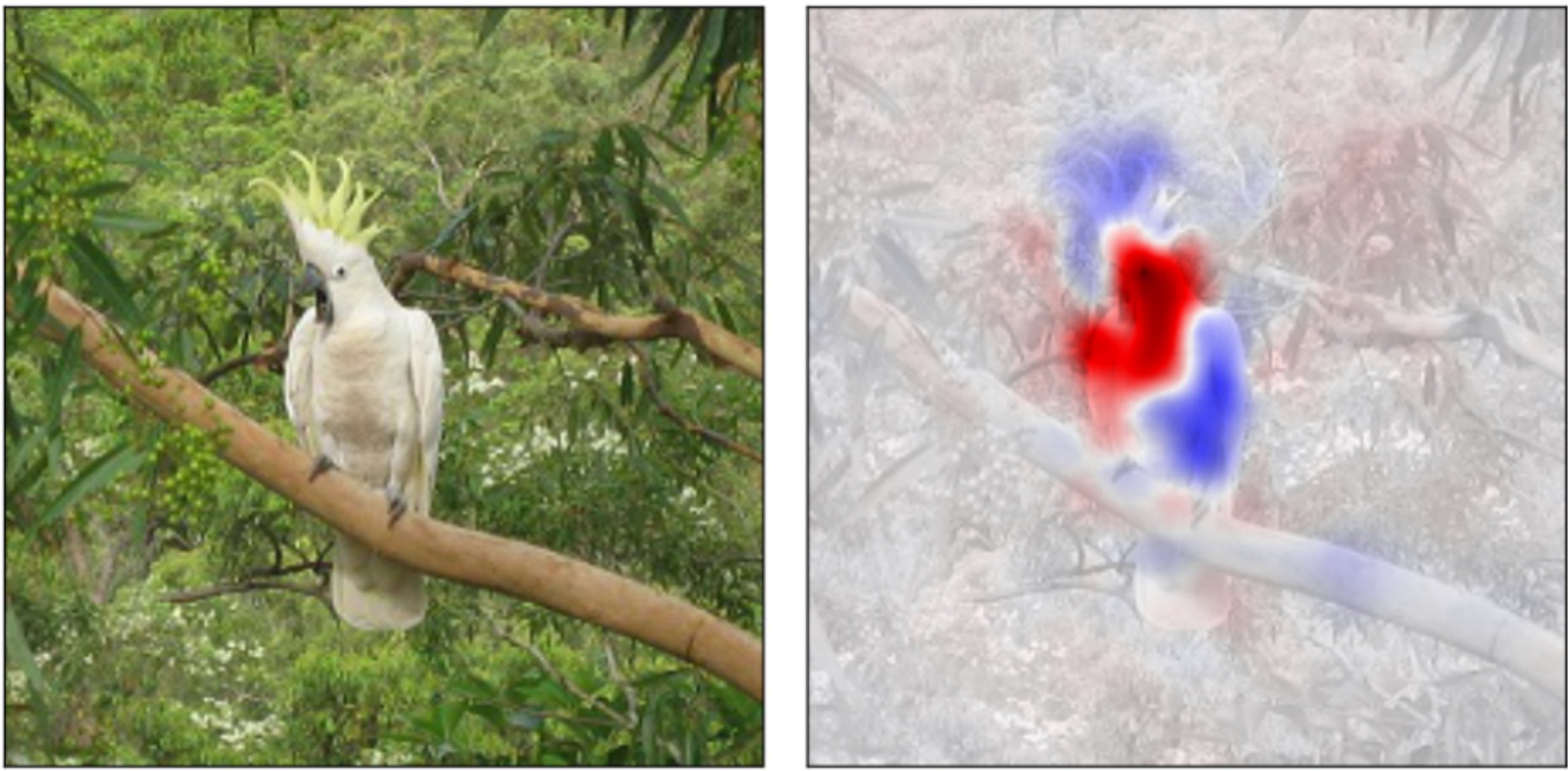

In recent years, the machine learning community has developed different methods [e.g. 1-7] to uncover why neural networks classify inputs the way they do. The majority of these algorithms assign each input feature a relevance value which indicates the importance for the classification result. The following image shows a saliency map (right) that contains the relevance values for the prediction “cuckatoo” of the GoogLeNet network and thus allows a human to see which parts of the input image (left) are important [7].

Due to the availability of various explanation methods it is hard for the practitioner to choose a method, especially since the majority of them have been designed to solve image classification problems. We are among the first to analyze different neural networks proposed to solve computer security problems using techniques from explainable learning.

Comparing Explanation Methods in Security

In our experiments we find that the output of the explanation methods can differ significantly depending on the network architecture and the dataset. At the same time, it is unclear how explanations can be compared in order to decide if one method is “better” than another one. Our paper aims to tackle this problem and introduces different criteria for comparing and evaluating explanation methods in the context of computer security. We evaluate:

-

Descriptive Accuracy: Does the explanation capture the relevant features? We remove the most important features and compare the prediction score.

-

Descriptive Sparsity: Are the explanations sparse? We measure how many features are irrelevant according to the explanation method.

-

Completeness: Some explanation methods can not explain certain samples in a meaningful way. We explore which methods suffer from this shortcoming.

-

Stability: The generated explanations are oftentimes not deterministic. We check check the magnitude of the deviation between multiple runs.

-

Robustness: Attacks on explanation methods emerged recently [8, 9]. We discuss which methods are vulnerable to these attacks.

-

Efficiency: How long does the computation of the exlanations take? We compare the different run-times of the algorithms in detail.

We evaluate six different explanation methods on different network architectures and application domains and find that some approaches perform well for all these metrics whereas others are unsuited for the use in computer security applicaions. In general we recommend the use of white-box methods whenever access to the parameters of the model is available since these methods are highly accurate, do not suffer from incompleteness or instability and generate explanations very fast.

Take a look yourself

We hope to establish the usage of explanationable learning strategies for machine learning methods in the computer security field to judge models not only by their performance but also by the concepts they have learned. To this end we publish example explanations, code and datasets used in our paper.

Examples

- A large portion of the explanations from all methods and datasets in our study can be found here

Models and datasets

- Datasets and scripts for model training can be found in the paper repository

Implementations of explanation methods

- LRP, Integrated Gradients, Gradient * Input and other methods based on a backwards-pass

- LRP for LSTMs

- Lime, Lemna and SHAP

Citation

If you use our code or refer to our results please cite our paper using the following BibTex entry:

@inproceedings{WarArpWre+20,

author={A. {Warnecke} and D. {Arp} and C. {Wressnegger} and K. {Rieck}},

booktitle={2020 IEEE European Symposium on Security and Privacy (EuroS&P)},

title={Evaluating Explanation Methods for Deep Learning in Security},

year={2020}

}

References

[1] S. Bach et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE, 2015.

[2] M. Sundararajan, A. Taly, and Q. Yan. Axiomatic attribution for deep networks. International Conference on Machine Learning (ICML), 2017.

[3] K. Simonyan, A. Vedaldi, and A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. International Conference on Learning Representations (ICLR), 2014.

[4] M. T. Ribeiro, S. Singh, and C. Guestrin. “why should i trust you?”: Explaining the predictions of any classifier. ACM SIGKDD International Conference On Knowledge Discovery and Data Mining (KDD), 2016.

[5] S. M. Lundberg and S.-I. Lee. A unified approach to interpreting model predictions. Advances in Neural Information Proccessing Systems (NIPS), 2017.

[6] W. Guo et al. LEMNA: Explaining deep learning based security applications. ACM Conference on Computer and Communications Security (CCS), 2018.

[7] L. M. Zintgraf et al. Visualizing Deep Neural Network Decisions: Prediction Difference Analysis. International Conference on Learning Representations (ICLR), 2017.

[8] X. Zhang et al. Interpretable Deep Learning under Fire. USENIX Security Symposium, 2020

[9] K. Dombrowski et al. Explanations can be manipulated and geometry is to blame. Advances in Neural Information Proccessing Systems (NIPS), 2019